involution:大家一起内卷起来吧

本文介绍用Layer方式搭建involution算子,以此魔改ResNet打造RedNet模型,已加入【Paddle-Image-Models】项目,含转换后的最新预训练参数,精度基本对齐。还展示了算子和模型的搭建代码、测试情况及精度验证结果,RedNet性能和效率优于ResNet等模型。

引入

真·内卷无处不在,现在神经网络也能内卷了这次项目就用 Layer 的方式搭建一下 involution 算子,并且使用这个算子参照论文所述魔改一下 ResNet 打造一个新模型 RedNet当然这个模型也已经添加到了 【Paddle-Image-Models】 项目中了,包含转换之后的最新预训练参数,精度基本对齐好让大家能够尽快在神经网络里面内卷起来相关资料

论文:【Involution: Inverting the Inherence of Convolution for Visual Recognition】

代码:【d-li14/involution】

论文概要

提出了一种新的神经网络算子(operator或op)称为 involution,它比 convolution 更轻量更高效,形式上比 self-attention 更加简洁,可以用在各种视觉任务的模型上取得精度和效率的双重提升。通过 involution 的结构设计,我们能够以统一的视角来理解经典的卷积操作和近来流行的自注意力操作。算子和模型搭建

导入必要的包

In [1]import paddleimport paddle.nn as nnfrom paddle.vision.models import resnet登录后复制

involution(内卷)

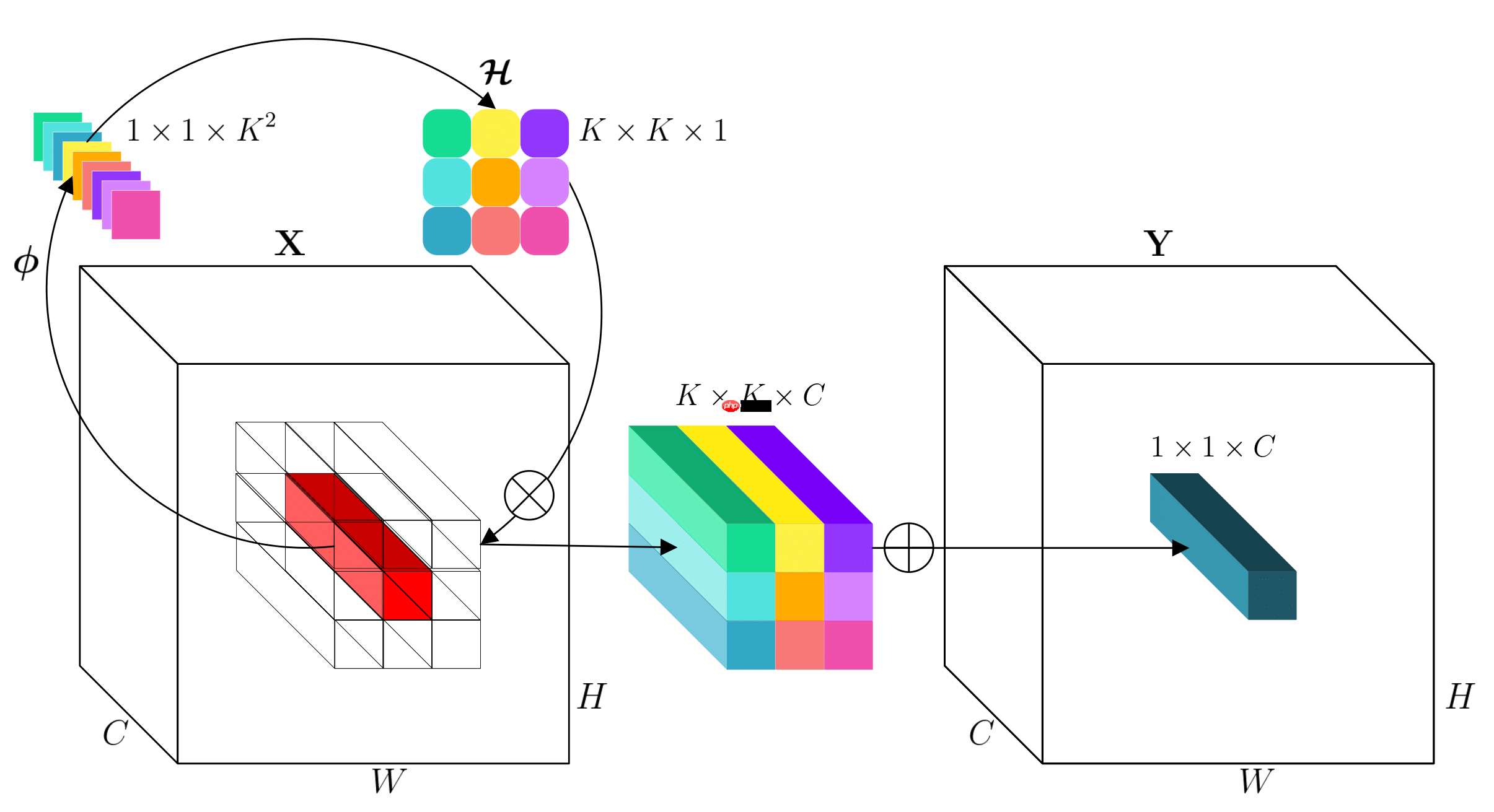

针对输入 feature map 的一个坐标点上的特征向量:先通过 (FC-BN-ReLU-FC) 和 reshape (channel-to-space) 变换展开成 kernel 的形状从而得到这个坐标点上对应的 involution kernel再和输入 feature map 上这个坐标点邻域的特征向量进行 Multiply-Add 得到最终输出的 feature mapinvolution 示意图如下: In [2]

In [2]class involution(nn.Layer): def __init__(self, channels, kernel_size, stride): super(involution, self).__init__() self.kernel_size = kernel_size self.stride = stride self.channels = channels reduction_ratio = 4 self.group_channels = 16 self.groups = self.channels // self.group_channels self.conv1 = nn.Sequential( ('conv', nn.Conv2D( in_channels=channels, out_channels=channels // reduction_ratio, kernel_size=1, bias_attr=False )), ('bn', nn.BatchNorm2D(channels // reduction_ratio)), ('activate', nn.ReLU()) ) self.conv2 = nn.Sequential( ('conv', nn.Conv2D( in_channels=channels // reduction_ratio, out_channels=kernel_size**2 * self.groups, kernel_size=1, stride=1)) ) if stride > 1: self.avgpool = nn.AvgPool2D(stride, stride) def forward(self, x): weight = self.conv2(self.conv1( x if self.stride == 1 else self.avgpool(x))) b, c, h, w = weight.shape weight = weight.reshape(( b, self.groups, self.kernel_size**2, h, w)).unsqueeze(2) out = nn.functional.unfold( x, self.kernel_size, strides=self.stride, paddings=(self.kernel_size-1)//2, dilations=1) out = out.reshape( (b, self.groups, self.group_channels, self.kernel_size**2, h, w)) out = (weight * out).sum(axis=3).reshape((b, self.channels, h, w)) return out登录后复制 算子测试

In [3]inv = involution(128, 7, 1)paddle.summary(inv, (1, 128, 64, 64))out = inv(paddle.randn((1, 128, 64, 64)))print(out.shape)登录后复制

--------------------------------------------------------------------------- Layer (type) Input Shape Output Shape Param # =========================================================================== Conv2D-1 [[1, 128, 64, 64]] [1, 32, 64, 64] 4,096 BatchNorm2D-1 [[1, 32, 64, 64]] [1, 32, 64, 64] 128 ReLU-1 [[1, 32, 64, 64]] [1, 32, 64, 64] 0 Conv2D-2 [[1, 32, 64, 64]] [1, 392, 64, 64] 12,936 ===========================================================================Total params: 17,160Trainable params: 17,032Non-trainable params: 128---------------------------------------------------------------------------Input size (MB): 2.00Forward/backward pass size (MB): 15.25Params size (MB): 0.07Estimated Total Size (MB): 17.32---------------------------------------------------------------------------[1, 128, 64, 64]登录后复制

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:648: UserWarning: When training, we now always track global mean and variance. "When training, we now always track global mean and variance.")登录后复制

RedNet

使用 involution 替换 ResNet BottleneckBlock 中的 3x3 convolution 得到了一族新的骨干网络 RedNet性能和效率优于 ResNet 和其他 self-attention 做 op 的 SOTA 模型模型具体信息如下:In [4]class BottleneckBlock(resnet.BottleneckBlock): def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1, base_width=64, dilation=1, norm_layer=None): super(BottleneckBlock, self).__init__(inplanes, planes, stride, downsample, groups, base_width, dilation, norm_layer) width = int(planes * (base_width / 64.)) * groups self.conv2 = involution(width, 7, stride) class RedNet(resnet.ResNet): def __init__(self, block, depth, num_classes=1000, with_pool=True): super(RedNet, self).__init__(block=block, depth=50, num_classes=num_classes, with_pool=with_pool) layer_cfg = { 26: [1, 2, 4, 1], 38: [2, 3, 5, 2], 50: [3, 4, 6, 3], 101: [3, 4, 23, 3], 152: [3, 8, 36, 3] } layers = layer_cfg[depth] self.conv1 = None self.bn1 = None self.relu = None self.inplanes = 64 self.stem = nn.Sequential( nn.Sequential( ('conv', nn.Conv2D( in_channels=3, out_channels=self.inplanes // 2, kernel_size=3, stride=2, padding=1, bias_attr=False )), ('bn', nn.BatchNorm2D(self.inplanes // 2)), ('activate', nn.ReLU()) ), involution(self.inplanes // 2, 3, 1), nn.BatchNorm2D(self.inplanes // 2), nn.ReLU(), nn.Sequential( ('conv', nn.Conv2D( in_channels=self.inplanes // 2, out_channels=self.inplanes, kernel_size=3, stride=1, padding=1, bias_attr=False )), ('bn', nn.BatchNorm2D(self.inplanes)), ('activate', nn.ReLU()) ) ) self.layer1 = self._make_layer(block, 64, layers[0]) self.layer2 = self._make_layer(block, 128, layers[1], stride=2) self.layer3 = self._make_layer(block, 256, layers[2], stride=2) self.layer4 = self._make_layer(block, 512, layers[3], stride=2) def forward(self, x): x = self.stem(x) x = self.maxpool(x) x = self.layer1(x) x = self.layer2(x) x = self.layer3(x) x = self.layer4(x) if self.with_pool: x = self.avgpool(x) if self.num_classes > 0: x = paddle.flatten(x, 1) x = self.fc(x) return x登录后复制 模型测试

In [5]model = RedNet(BottleneckBlock, 26)paddle.summary(model, (1, 3, 224, 224))out = model(paddle.randn((1, 3, 224, 224)))print(out.shape)登录后复制

------------------------------------------------------------------------------- Layer (type) Input Shape Output Shape Param # =============================================================================== Conv2D-88 [[1, 3, 224, 224]] [1, 32, 112, 112] 864 BatchNorm2D-71 [[1, 32, 112, 112]] [1, 32, 112, 112] 128 ReLU-35 [[1, 32, 112, 112]] [1, 32, 112, 112] 0 Conv2D-89 [[1, 32, 112, 112]] [1, 8, 112, 112] 256 BatchNorm2D-72 [[1, 8, 112, 112]] [1, 8, 112, 112] 32 ReLU-36 [[1, 8, 112, 112]] [1, 8, 112, 112] 0 Conv2D-90 [[1, 8, 112, 112]] [1, 18, 112, 112] 162 involution-18 [[1, 32, 112, 112]] [1, 32, 112, 112] 0 BatchNorm2D-73 [[1, 32, 112, 112]] [1, 32, 112, 112] 128 ReLU-37 [[1, 32, 112, 112]] [1, 32, 112, 112] 0 Conv2D-91 [[1, 32, 112, 112]] [1, 64, 112, 112] 18,432 BatchNorm2D-74 [[1, 64, 112, 112]] [1, 64, 112, 112] 256 ReLU-38 [[1, 64, 112, 112]] [1, 64, 112, 112] 0 MaxPool2D-1 [[1, 64, 112, 112]] [1, 64, 56, 56] 0 Conv2D-93 [[1, 64, 56, 56]] [1, 64, 56, 56] 4,096 BatchNorm2D-76 [[1, 64, 56, 56]] [1, 64, 56, 56] 256 ReLU-39 [[1, 256, 56, 56]] [1, 256, 56, 56] 0 Conv2D-96 [[1, 64, 56, 56]] [1, 16, 56, 56] 1,024 BatchNorm2D-79 [[1, 16, 56, 56]] [1, 16, 56, 56] 64 ReLU-40 [[1, 16, 56, 56]] [1, 16, 56, 56] 0 Conv2D-97 [[1, 16, 56, 56]] [1, 196, 56, 56] 3,332 involution-19 [[1, 64, 56, 56]] [1, 64, 56, 56] 0 BatchNorm2D-77 [[1, 64, 56, 56]] [1, 64, 56, 56] 256 Conv2D-95 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384 BatchNorm2D-78 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024 Conv2D-92 [[1, 64, 56, 56]] [1, 256, 56, 56] 16,384 BatchNorm2D-75 [[1, 256, 56, 56]] [1, 256, 56, 56] 1,024 BottleneckBlock-17 [[1, 64, 56, 56]] [1, 256, 56, 56] 0 Conv2D-99 [[1, 256, 56, 56]] [1, 128, 56, 56] 32,768 BatchNorm2D-81 [[1, 128, 56, 56]] [1, 128, 56, 56] 512 ReLU-41 [[1, 512, 28, 28]] [1, 512, 28, 28] 0 AvgPool2D-4 [[1, 128, 56, 56]] [1, 128, 28, 28] 0 Conv2D-102 [[1, 128, 28, 28]] [1, 32, 28, 28] 4,096 BatchNorm2D-84 [[1, 32, 28, 28]] [1, 32, 28, 28] 128 ReLU-42 [[1, 32, 28, 28]] [1, 32, 28, 28] 0 Conv2D-103 [[1, 32, 28, 28]] [1, 392, 28, 28] 12,936 involution-20 [[1, 128, 56, 56]] [1, 128, 28, 28] 0 BatchNorm2D-82 [[1, 128, 28, 28]] [1, 128, 28, 28] 512 Conv2D-101 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536 BatchNorm2D-83 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048 Conv2D-98 [[1, 256, 56, 56]] [1, 512, 28, 28] 131,072 BatchNorm2D-80 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048 BottleneckBlock-18 [[1, 256, 56, 56]] [1, 512, 28, 28] 0 Conv2D-104 [[1, 512, 28, 28]] [1, 128, 28, 28] 65,536 BatchNorm2D-85 [[1, 128, 28, 28]] [1, 128, 28, 28] 512 ReLU-43 [[1, 512, 28, 28]] [1, 512, 28, 28] 0 Conv2D-107 [[1, 128, 28, 28]] [1, 32, 28, 28] 4,096 BatchNorm2D-88 [[1, 32, 28, 28]] [1, 32, 28, 28] 128 ReLU-44 [[1, 32, 28, 28]] [1, 32, 28, 28] 0 Conv2D-108 [[1, 32, 28, 28]] [1, 392, 28, 28] 12,936 involution-21 [[1, 128, 28, 28]] [1, 128, 28, 28] 0 BatchNorm2D-86 [[1, 128, 28, 28]] [1, 128, 28, 28] 512 Conv2D-106 [[1, 128, 28, 28]] [1, 512, 28, 28] 65,536 BatchNorm2D-87 [[1, 512, 28, 28]] [1, 512, 28, 28] 2,048 BottleneckBlock-19 [[1, 512, 28, 28]] [1, 512, 28, 28] 0 Conv2D-110 [[1, 512, 28, 28]] [1, 256, 28, 28] 131,072 BatchNorm2D-90 [[1, 256, 28, 28]] [1, 256, 28, 28] 1,024 ReLU-45 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 AvgPool2D-5 [[1, 256, 28, 28]] [1, 256, 14, 14] 0 Conv2D-113 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384 BatchNorm2D-93 [[1, 64, 14, 14]] [1, 64, 14, 14] 256 ReLU-46 [[1, 64, 14, 14]] [1, 64, 14, 14] 0 Conv2D-114 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960 involution-22 [[1, 256, 28, 28]] [1, 256, 14, 14] 0 BatchNorm2D-91 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 Conv2D-112 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144 BatchNorm2D-92 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096 Conv2D-109 [[1, 512, 28, 28]] [1, 1024, 14, 14] 524,288 BatchNorm2D-89 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096 BottleneckBlock-20 [[1, 512, 28, 28]] [1, 1024, 14, 14] 0 Conv2D-115 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144 BatchNorm2D-94 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 ReLU-47 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-118 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384 BatchNorm2D-97 [[1, 64, 14, 14]] [1, 64, 14, 14] 256 ReLU-48 [[1, 64, 14, 14]] [1, 64, 14, 14] 0 Conv2D-119 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960 involution-23 [[1, 256, 14, 14]] [1, 256, 14, 14] 0 BatchNorm2D-95 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 Conv2D-117 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144 BatchNorm2D-96 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096 BottleneckBlock-21 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-120 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144 BatchNorm2D-98 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 ReLU-49 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-123 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384 BatchNorm2D-101 [[1, 64, 14, 14]] [1, 64, 14, 14] 256 ReLU-50 [[1, 64, 14, 14]] [1, 64, 14, 14] 0 Conv2D-124 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960 involution-24 [[1, 256, 14, 14]] [1, 256, 14, 14] 0 BatchNorm2D-99 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 Conv2D-122 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144 BatchNorm2D-100 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096 BottleneckBlock-22 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-125 [[1, 1024, 14, 14]] [1, 256, 14, 14] 262,144 BatchNorm2D-102 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 ReLU-51 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-128 [[1, 256, 14, 14]] [1, 64, 14, 14] 16,384 BatchNorm2D-105 [[1, 64, 14, 14]] [1, 64, 14, 14] 256 ReLU-52 [[1, 64, 14, 14]] [1, 64, 14, 14] 0 Conv2D-129 [[1, 64, 14, 14]] [1, 784, 14, 14] 50,960 involution-25 [[1, 256, 14, 14]] [1, 256, 14, 14] 0 BatchNorm2D-103 [[1, 256, 14, 14]] [1, 256, 14, 14] 1,024 Conv2D-127 [[1, 256, 14, 14]] [1, 1024, 14, 14] 262,144 BatchNorm2D-104 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 4,096 BottleneckBlock-23 [[1, 1024, 14, 14]] [1, 1024, 14, 14] 0 Conv2D-131 [[1, 1024, 14, 14]] [1, 512, 14, 14] 524,288 BatchNorm2D-107 [[1, 512, 14, 14]] [1, 512, 14, 14] 2,048 ReLU-53 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 0 AvgPool2D-6 [[1, 512, 14, 14]] [1, 512, 7, 7] 0 Conv2D-134 [[1, 512, 7, 7]] [1, 128, 7, 7] 65,536 BatchNorm2D-110 [[1, 128, 7, 7]] [1, 128, 7, 7] 512 ReLU-54 [[1, 128, 7, 7]] [1, 128, 7, 7] 0 Conv2D-135 [[1, 128, 7, 7]] [1, 1568, 7, 7] 202,272 involution-26 [[1, 512, 14, 14]] [1, 512, 7, 7] 0 BatchNorm2D-108 [[1, 512, 7, 7]] [1, 512, 7, 7] 2,048 Conv2D-133 [[1, 512, 7, 7]] [1, 2048, 7, 7] 1,048,576 BatchNorm2D-109 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192 Conv2D-130 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 2,097,152 BatchNorm2D-106 [[1, 2048, 7, 7]] [1, 2048, 7, 7] 8,192 BottleneckBlock-24 [[1, 1024, 14, 14]] [1, 2048, 7, 7] 0 AdaptiveAvgPool2D-1 [[1, 2048, 7, 7]] [1, 2048, 1, 1] 0 Linear-1 [[1, 2048]] [1, 1000] 2,049,000 ===============================================================================Total params: 9,264,318Trainable params: 9,202,014Non-trainable params: 62,304-------------------------------------------------------------------------------Input size (MB): 0.57Forward/backward pass size (MB): 188.62Params size (MB): 35.34Estimated Total Size (MB): 224.53-------------------------------------------------------------------------------[1, 1000]登录后复制

模型精度验证

使用 Paddle-Image-Models 来进行模型精度验证安装 PPIM

In [ ]!pip install ppim==1.0.1 -i https://pypi.python.org/pypi登录后复制

解压数据集

解压 ILSVRC2012 验证集In [ ]# 解压数据集!mkdir ~/data/ILSVRC2012!tar -xf ~/data/data68594/ILSVRC2012_img_val.tar -C ~/data/ILSVRC2012登录后复制

模型评估

使用 ILSVRC2012 验证集进行精度验证In [ ]import osimport cv2import numpy as npimport paddleimport paddle.vision.transforms as Tfrom ppim import rednet26, rednet38, rednet50, rednet101, rednet152# 构建数据集# backend cv2class ILSVRC2012(paddle.io.Dataset): def __init__(self, root, label_list, transform): self.transform = transform self.root = root self.label_list = label_list self.load_datas() def load_datas(self): self.imgs = [] self.labels = [] with open(self.label_list, 'r') as f: for line in f: img, label = line[:-1].split(' ') self.imgs.append(os.path.join(self.root, img)) self.labels.append(int(label)) def __getitem__(self, idx): label = self.labels[idx] image = self.imgs[idx] image = cv2.imread(image) image = self.transform(image) return image.astype('float32'), np.array(label).astype('int64') def __len__(self): return len(self.imgs)# 配置模型model, val_transforms = rednet26(pretrained=True)model = paddle.Model(model)model.prepare(metrics=paddle.metric.Accuracy(topk=(1, 5)))# 配置数据集val_dataset = ILSVRC2012('data/ILSVRC2012', transform=val_transforms, label_list='data/data68594/val_list.txt')# 模型验证model.evaluate(val_dataset, batch_size=16)登录后复制 {'acc_top1': 0.75956, 'acc_top5': 0.9319}登录后复制 免责声明

游乐网为非赢利性网站,所展示的游戏/软件/文章内容均来自于互联网或第三方用户上传分享,版权归原作者所有,本站不承担相应法律责任。如您发现有涉嫌抄袭侵权的内容,请联系youleyoucom@outlook.com。

同类文章

国产垂类AI如何突破?谷歌"香蕉"爆火启示

最近,一根香蕉打破了AI圈的平静。 最初可能只是朋友圈病毒式传播的手办图,但随后事情似乎向着疯狂的方向发展,上线不到两周,谷歌旗下的Nano Banana已在全球生产超2亿张图片,亚太地区用户热情

2029年农用机器人市场规模将突破200亿,极飞引领智慧农业发展

广州极飞科技股份有限公司近日正式向港交所递交上市申请,华泰国际担任此次上市的独家保荐人。这并非极飞科技首次尝试登陆资本市场,早在2024年11月,该公司曾向上交所科创板提出上市申请,由申万宏源证券承

OpenAI实测:最强打工AI评测,榜首竟是它

OpenAI发布最新研究,却在里面夸了一波Claude。 他们提出名为GDPval的新基准,用来衡量AI模型在真实世界具有经济价值的任务上的表现。 具体来说,GDPval覆盖了对美国GDP贡献最

AI技术落地难?场景深耕才能释放真实产业价值

全球科技领域正掀起一场围绕人工智能(AI)的激烈角逐。英伟达与OpenAI达成千亿美元级合作协议,阿里巴巴宣布投入3800亿元布局AI生态,meta则计划在未来数年内斥资至少6000亿美元完善AI基

摩尔线程80亿科创板募资创纪录,仅88天火速过会

上交所近日发布公告,摩尔线程智能科技(北京)股份有限公司以88天的审核周期刷新科创板过会纪录,成为该板块设立以来最快过会企业。这家成立仅五年的GPU初创公司,同步启动80亿元规模的A股半导体行业最大

相关攻略

热门教程

更多- 游戏攻略

- 安卓教程

- 苹果教程

- 电脑教程