SCNet: Self-Calibrated Convolutional Networks, Performance Gains Without Added Complexity


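When you type ls in a terminal and get a file listing, the PATH environment variable is what makes it work. This seemingly simple setting is central to how Linux runs commands. Starting from the basic concepts of PATH, this guide works through how it operates, how to configure it, and how to keep it secure, so that you come away with a thorough grasp of this key part of a Linux system.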

I. PATH: Core Concepts and How It Works

1. What PATH Is and What It Does

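The PATH environment variable is a colon-separated list of directories. It tells the shell which directories to search for an executable when you type a command. For example, if PATH is /usr/bin:/bin and you type ls, the shell looks for an executable file named ls in those two directories, in that order.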
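The lookup described above can be approximated in a few lines of POSIX shell. This is only a sketch of the idea, not how any particular shell implements it: real shells also consult aliases, functions, builtins, and a cache of earlier lookups first. The function name find_in_path is made up for this illustration.

```shell
#!/bin/sh
# find_in_path NAME [PATHLIST]  (hypothetical helper, for illustration only)
# Walk a colon-separated directory list from left to right and print the
# first executable regular file called NAME. PATHLIST defaults to $PATH.
# The body runs in a subshell so its variables do not leak to the caller.
find_in_path() (
    name=$1
    rest=${2-$PATH}:              # trailing colon marks the end of the list
    while [ -n "$rest" ]; do
        dir=${rest%%:*}           # text before the first colon
        rest=${rest#*:}           # everything after the first colon
        [ -n "$dir" ] || dir=.    # an empty entry stands for the current directory
        if [ -f "$dir/$name" ] && [ -x "$dir/$name" ]; then
            printf '%s\n' "$dir/$name"
            return 0              # first match wins, stop searching
        fi
    done
    return 1                      # nothing found in any directory
)

# Example call: print where ls would be found, if anywhere.
find_in_path ls || echo "ls not found in PATH"
```

Passing an explicit list as the second argument, for example find_in_path ls /usr/bin:/bin, makes it easy to experiment without touching the real PATH.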

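This design is a major convenience: you do not have to type the full path of a command (such as /usr/bin/ls) every time, which makes command-line work much faster. At the same time, PATH configuration directly affects system security: a misconfigured PATH can cause a malicious program to be run in place of the command you intended.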
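To make the risk concrete, the following sketch places a harmless stand-in script named ls in a throwaway directory and shows that it is picked up once that directory sits at the front of PATH. The variable names demo_dir and resolved are illustrative, and the PATH change is confined to a subshell so nothing in your session is altered.

```shell
#!/bin/sh
# Demonstration only: create a harmless fake "ls" in a temporary directory.
demo_dir=$(mktemp -d)
printf '#!/bin/sh\necho "this is not the real ls"\n' > "$demo_dir/ls"
chmod +x "$demo_dir/ls"

# Resolve "ls" inside a subshell whose PATH starts with the demo directory,
# so the modified PATH never leaks into the calling shell.
resolved=$(PATH="$demo_dir:$PATH"; command -v ls)
printf 'With the directory prepended, ls resolves to: %s\n' "$resolved"

# Resolve it again with the unmodified PATH for comparison.
printf 'With the normal PATH, ls resolves to: %s\n' "$(command -v ls)"

# Clean up the throwaway directory.
rm -rf "$demo_dir"
```

The same thing happens if a writable or untrusted directory ends up ahead of the system directories, which is why the order of PATH entries deserves attention.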

2. The Shell's Command Search Strategy

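Left-to-right search order: The shell checks the directories in PATH strictly in the order they are listed. As soon as it finds a matching executable, it runs it and does not search the remaining directories.

Paths take priority: When the command you type contains a slash (for example /usr/bin/python, or a relative form such as ./script.sh), the shell runs the file at that path directly and ignores PATH entirely.

Empty entries and relative paths: If PATH contains an empty entry (for example a doubled colon, ::), the shell treats it as the current directory (.), which can create a security risk.

II. Viewing and Analyzing the Current PATH Configuration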

Before changing PATH, it is worth checking how it is currently set. Linux offers several ways to view it:

1. The simplest way
echo $PATH
Sample output: /usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin

2. A command dedicated to environment variables
printenv PATH

3. All shell variables that mention PATH
set | grep PATH

4. PATH among the environment variables
env | grep PATH
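A single long line is hard to read, so for analysis it can help to print one entry per line and flag the ones worth a second look. The sketch below is one possible approach; path_audit is a hypothetical helper name, and the three labels it prints (ok, missing, current directory) are choices made for this example.

```shell
#!/bin/sh
# path_audit [PATHLIST]  (hypothetical helper, for illustration only)
# Print every entry of a colon-separated list on its own numbered line and
# label it: "ok" if the directory exists, "missing" if it does not, and
# "current directory" for an empty entry or a literal dot.
# The body runs in a subshell so its variables do not leak to the caller.
path_audit() (
    rest=${1-$PATH}:              # trailing colon marks the end of the list
    n=0
    while [ -n "$rest" ]; do
        dir=${rest%%:*}           # text before the first colon
        rest=${rest#*:}           # everything after the first colon
        n=$((n + 1))
        if [ -z "$dir" ] || [ "$dir" = . ]; then
            note='current directory'
        elif [ ! -d "$dir" ]; then
            note='missing'
        else
            note='ok'
        fi
        printf '%s  %s  %s\n' "$n" "${dir:-<empty>}" "$note"
    done
)

# Audit the PATH of the current shell.
path_audit
```

If only the one-entry-per-line view is needed, printf '%s\n' "$PATH" | tr ':' '\n' does that without any helper.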

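Quick reference: PATH viewing commands compared

III. Configuring PATH Flexibly: Temporary and Permanent Changes

1. Temporary changes: effective only in the current session

A temporary change suits testing, or short-term use of programs in a particular directory. The setting is lost as soon as you close the terminal.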

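Append a directory to PATH (lower priority)
export PATH="$PATH:/path/to/new/dir"

Prepend a directory to PATH (highest priority)
export PATH="/path/to/new/dir:$PATH"

Practical example: adding a personal scripts directory
mkdir ~/scripts # create the scripts directory
export PATH="$PATH:$HOME/scripts" # add it to PATH
echo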
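Running the export lines above more than once in the same session adds the same directory again each time. One common way around that is a small guard that checks whether the directory is already listed. The sketch below assumes a POSIX shell; path_append and path_prepend are hypothetical helper names, not standard commands.

```shell
#!/bin/sh
# path_append DIR  (hypothetical helper)
# Add DIR to the end of PATH unless it is already listed.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                        # already present, do nothing
        *) PATH="${PATH:+$PATH:}$1" ;;      # avoid a stray colon if PATH is empty
    esac
    export PATH
}

# path_prepend DIR  (hypothetical helper)
# Add DIR to the front of PATH unless it is already listed.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;                        # already present, do nothing
        *) PATH="$1${PATH:+:$PATH}" ;;
    esac
    export PATH
}

# Example: add the personal scripts directory; a second call is a no-op.
path_append "$HOME/scripts"
path_append "$HOME/scripts"
```

Wrapping PATH in colons before matching keeps /opt/bin from being mistaken for /opt/bin2, since each entry is compared as a whole.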

免责声明

游乐网为非赢利性网站,所展示的游戏/软件/文章内容均来自于互联网或第三方用户上传分享,版权归原作者所有,本站不承担相应法律责任。如您发现有涉嫌抄袭侵权的内容,请联系youleyoucom@outlook.com。

最新文章

估值翻倍用时约 15 个月:法 AI 企业 Mistral 新融资轮中估值达 120 亿欧元

9 月 5 日消息,彭博社北京时间 4 日早晨表示,法国人工智能初创企业 Mistral AI 即将以 120 亿欧元(注:现汇率约合 998 44 亿元人民币)的估值完成新一轮 20 亿欧元金额

飞利浦 Hue 发布 Bridge Pro 智能桥接器:芯片性能强 5 倍,支持连接 150 盏灯

9 月 5 日消息,科技媒体 MacRumors 昨日(9 月 4 日)发布博文,报道称 Signify 发布飞利浦 Hue 系列多款秋季新品,包括支持 150 个设备的 Hue Bridge P

通义千问系列最强大的语言模型:Qwen3-Max-Preview 上线

9 月 5 日消息,阿里通义千问今日在正式和 OpenRouter 上线了最新的 Qwen-3-Max-Preview 模型。根据正式描述,该模型是通义千问系列中最强大的语言模型。附有关地址如下:

腾讯混元游戏 2.0 发布:图片秒变动画 / CG,全面开放使用

9 月 5 日消息,腾讯今日官宣,“混元游戏”(腾讯混元游戏视觉生成平台)发布全新 2 0 版本,新增游戏图生视频、自定义模型训练、角色一键精修等能力,并大幅提升游戏 2D 生图模型能力,图生视频

OpenAI 明年杀入招聘市场,将帮助具备 AI 技能的人才找到工作

9 月 5 日消息,据彭博社今日报道,OpenAI 计划明年推出一款 AI 驱动的全新招聘平台,旨在帮助雇主寻找具备人工智能技能的人才,从而加速 AI 在企业和政府机构的应用。OpenAI 还将在

热门推荐

热门教程

更多- 游戏攻略

- 安卓教程

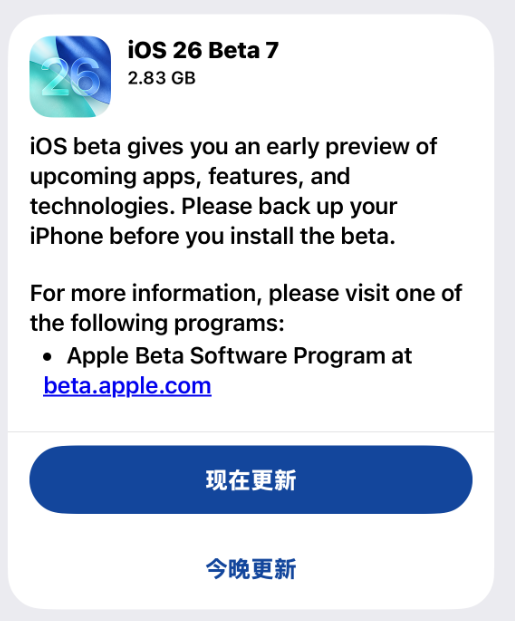

- 苹果教程

- 电脑教程